许多文档包含多种内容类型,包括文本和图像。

然而,在大多数 RAG 应用中,图像中捕获的信息都会丢失。

随着多模态LLMs的出现,比如GPT-4V,如何在RAG中利用图像是值得考虑的。

本篇指南的亮点是:

- 使用非结构化来解析文档 (PDF) 中的图像、文本和表格。

- 使用多模态嵌入(例如 CLIP)来嵌入图像和文本

- 使用 VDMS 作为支持多模式的矢量存储

- 使用相似性搜索检索图像和文本

- 将原始图像和文本块传递到多模式 LLM 以进行答案合成

Packages

对于 unstructured ,您的系统中还需要 poppler (安装说明)和 tesseract (安装说明)。

# (newest versions required for multi-modal)

! pip install --quiet -U vdms langchain-experimental

# lock to 0.10.19 due to a persistent bug in more recent versions

! pip install --quiet pdf2image "unstructured[all-docs]==0.10.19" pillow pydantic lxml open_clip_torch

启动VDMS服务器

让我们使用端口 55559 而不是默认的 55555 启动 VDMS docker。记下端口和主机名,因为矢量存储需要这些端口和主机名,因为它使用 VDMS Python 客户端连接到服务器。

! docker run --rm -d -p 55559:55555 --name vdms_rag_nb intellabs/vdms:latest

# Connect to VDMS Vector Store

from langchain_community.vectorstores.vdms import VDMS_Client

vdms_client = VDMS_Client(port=55559)

docker: Error response from daemon: Conflict. The container name

“/vdms_rag_nb” is already in use by container

“0c19ed281463ac10d7efe07eb815643e3e534ddf24844357039453ad2b0c27e8”.

You have to remove (or rename) that container to be able to reuse that

name. See ‘docker run --help’.

# from dotenv import load_dotenv, find_dotenv

# load_dotenv(find_dotenv(), override=True);

数据加载

分割 PDF 文本和图像

让我们看一个包含有趣图像的 pdf 示例。

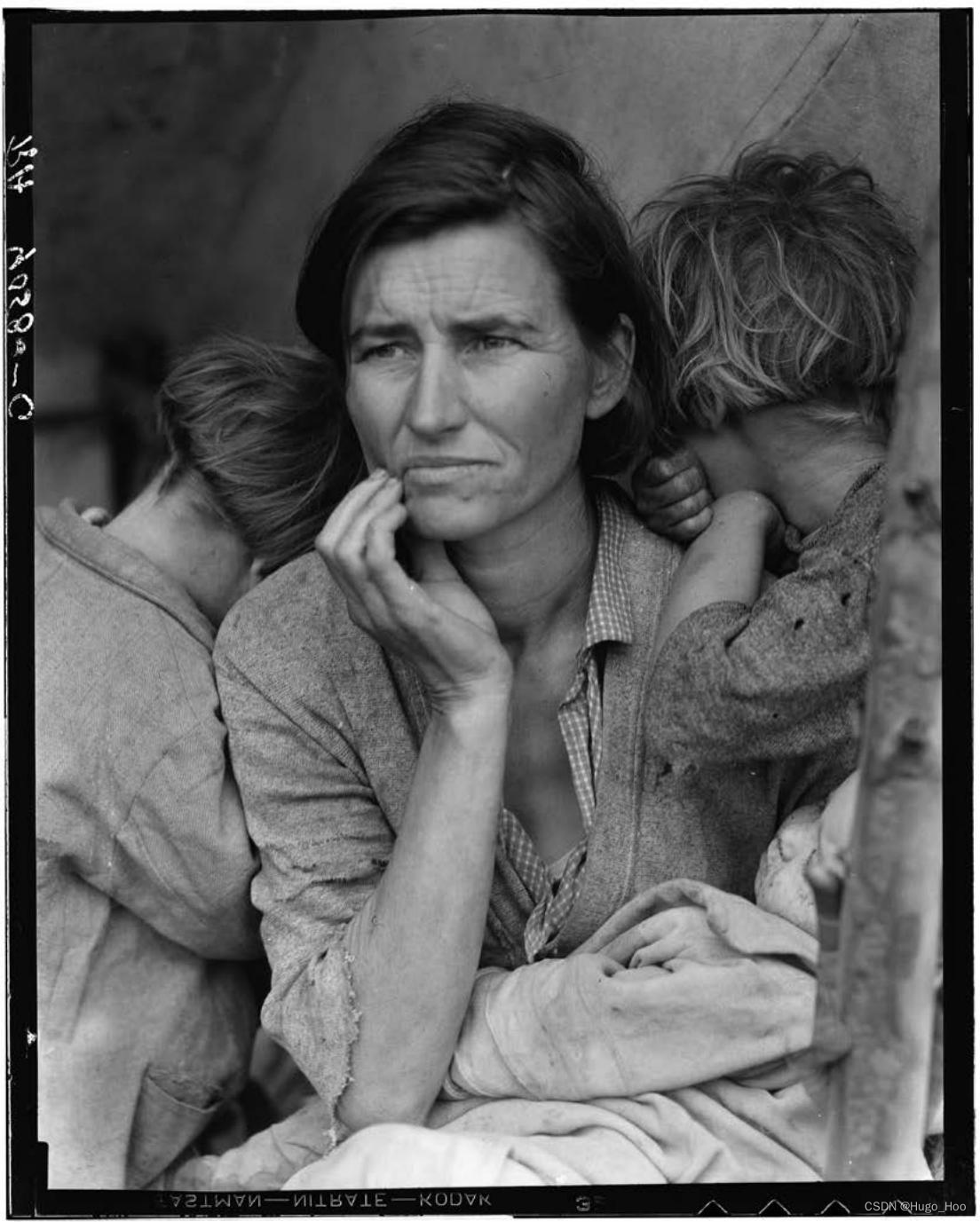

国会图书馆的著名照片:

- https://www.loc.gov/lcm/pdf/LCM_2020_1112.pdf

- 我们将在下面使用它作为示例

我们可以使用下面的 Unstructed 中的 partition_pdf 来提取文本和图像。

from pathlib import Path

import requests

# Folder with pdf and extracted images

datapath = Path("./multimodal_files").resolve()

datapath.mkdir(parents=True, exist_ok=True)

pdf_url = "https://www.loc.gov/lcm/pdf/LCM_2020_1112.pdf"

pdf_path = str(datapath / pdf_url.split("/")[-1])

with open(pdf_path, "wb") as f:

f.write(requests.get(pdf_url).content)

# Extract images, tables, and chunk text

from unstructured.partition.pdf import partition_pdf

raw_pdf_elements = partition_pdf(

filename=pdf_path,

extract_images_in_pdf=True,

infer_table_structure=True,

chunking_strategy="by_title",

max_characters=4000,

new_after_n_chars=3800,

combine_text_under_n_chars=2000,

image_output_dir_path=datapath,

)

datapath = str(datapath)

# Categorize text elements by type

tables = []

texts = []

for element in raw_pdf_elements:

if "unstructured.documents.elements.Table" in str(type(element)):

tables.append(str(element))

elif "unstructured.documents.elements.CompositeElement" in str(type(element)):

texts.append(str(element))

我们的文档的多模态嵌入

我们将使用 OpenClip 多模态嵌入。

我们使用更大的模型以获得更好的性能(在 langchain_experimental.open_clip.py 中设置)。

model_name = "ViT-g-14"

checkpoint = "laion2b_s34b_b88k"

import os

from langchain_community.vectorstores import VDMS

from langchain_experimental.open_clip import OpenCLIPEmbeddings

# Create VDMS

vectorstore = VDMS(

client=vdms_client,

collection_name="mm_rag_clip_photos",

embedding_function=OpenCLIPEmbeddings(

model_name="ViT-g-14", checkpoint="laion2b_s34b_b88k"

),

)

# Get image URIs with .jpg extension only

image_uris = sorted(

[

os.path.join(datapath, image_name)

for image_name in os.listdir(datapath)

if image_name.endswith(".jpg")

]

)

# Add images

if image_uris:

vectorstore.add_images(uris=image_uris)

# Add documents

if texts:

vectorstore.add_texts(texts=texts)

# Make retriever

retriever = vectorstore.as_retriever()

RAG

vectorstore.add_images 将存储/检索图像作为 base64 编码字符串。

import base64

from io import BytesIO

from PIL import Image

def resize_base64_image(base64_string, size=(128, 128)):

"""

Resize an image encoded as a Base64 string.

Args:

base64_string (str): Base64 string of the original image.

size (tuple): Desired size of the image as (width, height).

Returns:

str: Base64 string of the resized image.

"""

# Decode the Base64 string

img_data = base64.b64decode(base64_string)

img = Image.open(BytesIO(img_data))

# Resize the image

resized_img = img.resize(size, Image.LANCZOS)

# Save the resized image to a bytes buffer

buffered = BytesIO()

resized_img.save(buffered, format=img.format)

# Encode the resized image to Base64

return base64.b64encode(buffered.getvalue()).decode("utf-8")

def is_base64(s):

"""Check if a string is Base64 encoded"""

try:

return base64.b64encode(base64.b64decode(s)) == s.encode()

except Exception:

return False

def split_image_text_types(docs):

"""Split numpy array images and texts"""

images = []

text = []

for doc in docs:

doc = doc.page_content # Extract Document contents

if is_base64(doc):

# Resize image to avoid OAI server error

images.append(

resize_base64_image(doc, size=(250, 250))

) # base64 encoded str

else:

text.append(doc)

return {"images": images, "texts": text}

目前,我们使用 RunnableLambda 格式化输入,同时向 ChatPromptTemplates 添加图像支持。

我们的可运行程序遵循经典的 RAG 流程 -

-

我们首先计算上下文(在本例中是“文本”和“图像”)和问题(这里只是一个 RunnablePassthrough)

-

然后我们将其传递到提示模板中,这是一个自定义函数,用于格式化 llava 模型的消息。

-

最后我们将输出解析为字符串。

在这里,我们使用 Ollama 来服务 Llava 模型。请参阅 Ollama 了解设置说明。

from langchain_community.llms.ollama import Ollama

from langchain_core.messages import HumanMessage

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnableLambda, RunnablePassthrough

def prompt_func(data_dict):

# Joining the context texts into a single string

formatted_texts = "\n".join(data_dict["context"]["texts"])

messages = []

# Adding image(s) to the messages if present

if data_dict["context"]["images"]:

image_message = {

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{data_dict['context']['images'][0]}"

},

}

messages.append(image_message)

# Adding the text message for analysis

text_message = {

"type": "text",

"text": (

"As an expert art critic and historian, your task is to analyze and interpret images, "

"considering their historical and cultural significance. Alongside the images, you will be "

"provided with related text to offer context. Both will be retrieved from a vectorstore based "

"on user-input keywords. Please convert answers to english and use your extensive knowledge "

"and analytical skills to provide a comprehensive summary that includes:\n"

"- A detailed description of the visual elements in the image.\n"

"- The historical and cultural context of the image.\n"

"- An interpretation of the image's symbolism and meaning.\n"

"- Connections between the image and the related text.\n\n"

f"User-provided keywords: {data_dict['question']}\n\n"

"Text and / or tables:\n"

f"{formatted_texts}"

),

}

messages.append(text_message)

return [HumanMessage(content=messages)]

def multi_modal_rag_chain(retriever):

"""Multi-modal RAG chain"""

# Multi-modal LLM

llm_model = Ollama(

verbose=True, temperature=0.5, model="llava", base_url="http://localhost:11434"

)

# RAG pipeline

chain = (

{

"context": retriever | RunnableLambda(split_image_text_types),

"question": RunnablePassthrough(),

}

| RunnableLambda(prompt_func)

| llm_model

| StrOutputParser()

)

return chain

测试检索并运行 RAG

from IPython.display import HTML, display

def plt_img_base64(img_base64):

# Create an HTML img tag with the base64 string as the source

image_html = f'<img src="data:image/jpeg;base64,{img_base64}" />'

# Display the image by rendering the HTML

display(HTML(image_html))

query = "Woman with children"

docs = retriever.invoke(query, k=10)

for doc in docs:

if is_base64(doc.page_content):

plt_img_base64(doc.page_content)

else:

print(doc.page_content)

GREAT PHOTOGRAPHS

The subject of the photo, Florence Owens Thompson, a Cherokee from Oklahoma, initially regretted that Lange ever made this photograph. “She was a very strong woman. She was a leader,” her daughter Katherine later said. “I think that’s one of the reasons she resented the photo — because it didn’t show her in that light.”DOROTHEA LANGE. “DESTITUTE PEA PICKERS IN CALIFORNIA. MOTHER OF SEVEN

CHILDREN. AGE THIRTY-TWO. NIPOMO, CALIFORNIA.” MARCH 1936. NITRATE

NEGATIVE. FARM SECURITY ADMINISTRATION-OFFICE OF WAR INFORMATION

COLLECTION. PRINTS AND PHOTOGRAPHS DIVISION.—Helena Zinkham

—Helena Zinkham

NOVEMBER/DECEMBER 2020 LOC.GOV/LCM

chain = multi_modal_rag_chain(retriever)

response = chain.invoke(query)

print(response)

1. Detailed description of the visual elements in the image: The image features a woman with children, likely a mother and her family, standing together outside. They appear to be poor or struggling financially, as indicated by their attire and surroundings.

2. Historical and cultural context of the image: The photo was taken in 1936 during the Great Depression, when many families struggled to make ends meet. Dorothea Lange, a renowned American photographer, took this iconic photograph that became an emblem of poverty and hardship experienced by many Americans at that time.

3. Interpretation of the image's symbolism and meaning: The image conveys a sense of unity and resilience despite adversity. The woman and her children are standing together, displaying their strength as a family unit in the face of economic challenges. The photograph also serves as a reminder of the importance of empathy and support for those who are struggling.

4. Connections between the image and the related text: The text provided offers additional context about the woman in the photo, her background, and her feelings towards the photograph. It highlights the historical backdrop of the Great Depression and emphasizes the significance of this particular image as a representation of that time period.

! docker kill vdms_rag_nb

vdms_rag_nb

总结

本文介绍了如何在检索-生成(RAG)应用中结合使用多模态大型语言模型(LLMs),如GPT-4V,来处理包含文本和图像的混合文档。文章首先强调了在RAG中整合图像信息的重要性,并提出了使用非结构化工具来解析PDF中的图像、文本和表格的方法。接着,介绍了如何利用多模态嵌入(例如CLIP)和VDMS作为矢量存储来嵌入和检索图像和文本。文章还提供了详细的代码示例,包括如何启动VDMS服务器、加载数据、创建多模态嵌入、构建RAG链,以及如何测试检索和RAG链的运行。最后,文章通过一个具体的例子演示了如何使用RAG链来分析和解释图像,并提供了对图像的详细描述、历史和文化背景、象征意义的解释,以及图像与相关文本之间的联系。

扩展知识:

多模态LLMs:能够同时处理和生成图像和文本,适用于需要理解多种数据类型的应用场景。

RAG模型:结合了检索系统和生成模型,能够从大量文档中检索相关信息并生成答案。

VDMS:一种矢量数据库管理系统,支持多模态数据的存储和检索。

CLIP模型:由OpenAI开发,能够将图像和文本映射到共同的嵌入空间,用于图像和文本的联合检索。

非结构化数据处理:指对PDF等文档进行解析,提取其中的文本、图像和表格等信息,为后续处理提供结构化数据。